Use ElasticSearch to search and use external sources, like Wikipedia, inside eXo Platform

Since version 4, eXo Platform has included a unified search that greatly improves its search capabilities. All of the platform’s resources (content, files, wiki pages, etc.) can now be found from a single, centralized location.

Besides these out-of-the-box capabilities, a new API allows creation of custom search connectors in order to extend the search scope and enrich the results. This blog post explains how to implement and configure such a connector.

For this blog post, the search connector will retrieve data indexed by ElasticSearch, a powerful and easy-to-use search engine. It is of course up to you to decide what your search connector returns (data indexed by another search engine, data from a database, other custom data stored in eXo, etc.).

ElasticSearch

The first step if we want to use ElasticSearch is to install and configure it! The only thing to do here is to download it, extract it, and start it with:

bin/elasticsearch.sh -f

Index data in ElasticSearch

Out of the box, ElasticSearch is empty: no data has been indexed yet, so we need to feed it. For this purpose, we will use the Wikipedia River plugin. A river is an ElasticSearch component that feeds ElasticSearch with data to index. The Wikipedia River simply feeds ElasticSearch with Wikipedia pages.

After stopping your ElasticSearch server you can install the plugin with:

bin/plugin -install elasticsearch/elasticsearch-river-wikipedia/1.3.0

After restarting ElasticSearch you should see logs similar to the following:

[2013-11-27 11:55:48,716][INFO ][node] [It, the Living Colossus] version[0.90.7], pid[14776], build[36897d0/2013-11-13T12:06:54Z]
[2013-11-27 11:55:48,716][INFO ][node] [It, the Living Colossus] initializing ...
[2013-11-27 11:55:48,725][INFO ][plugins] [It, the Living Colossus] loaded [river-wikipedia], sites []
[2013-11-27 11:55:50,632][INFO ][node] [It, the Living Colossus] initialized
[2013-11-27 11:55:50,632][INFO ][node] [It, the Living Colossus] starting ...
[2013-11-27 11:55:50,718][INFO ][transport] [It, the Living Colossus] bound_address {inet[/0:0:0:0:0:0:0:0:9300]}, publish_address {inet[/192.168.0.5:9300]}

This confirms that the Wikipedia River plugin is correctly installed (see the line containing loaded [river-wikipedia]).

We can now start indexing Wikipedia pages in ElasticSearch by creating the river with a REST call (we are using curl here; feel free to use your favorite tool):

curl -XPUT localhost:9200/_river/my_river/_meta -d '
{
  "type" : "wikipedia"
}
'

A lot of data is now being indexed by ElasticSearch (yes, Wikipedia is a huge source of data :)). You can check this by executing a search with:

curl -XGET 'http://localhost:9200/_search?q=test'

Warning: the Wikipedia River will index a lot of data. You should stop the river after a few minutes to avoid filling your entire disk space ;-). This can be done by deleting the river with:

curl -XDELETE localhost:9200/_river/my_river
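If you would rather not rely on remembering to stop the river by hand, the two curl calls above can be scripted. The following is only a minimal sketch using the JDK's HttpURLConnection: the class name RiverControl, its method names, and the idea of indexing for a fixed duration are all assumptions made for illustration, not part of ElasticSearch or eXo.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper (not an ElasticSearch or eXo class) wrapping the two river REST calls. */
final class RiverControl {

    private final String baseUrl;   // e.g. "http://localhost:9200"
    private final String riverName; // e.g. "my_river"

    RiverControl(String baseUrl, String riverName) {
        this.baseUrl = baseUrl;
        this.riverName = riverName;
    }

    /** URL targeted by the PUT call that creates the river. */
    String metaUrl() {
        return baseUrl + "/_river/" + riverName + "/_meta";
    }

    /** URL targeted by the DELETE call that removes the river. */
    String riverUrl() {
        return baseUrl + "/_river/" + riverName;
    }

    /** Equivalent of the curl -XPUT call; returns the HTTP status code. */
    int create() throws IOException {
        return send("PUT", metaUrl(), "{ \"type\" : \"wikipedia\" }");
    }

    /** Equivalent of the curl -XDELETE call; returns the HTTP status code. */
    int delete() throws IOException {
        return send("DELETE", riverUrl(), null);
    }

    /** Creates the river, waits for the given time, then always deletes it. */
    void indexFor(long millis) throws IOException, InterruptedException {
        create();
        try {
            Thread.sleep(millis);
        } finally {
            delete(); // runs even if the wait is interrupted
        }
    }

    private static int send(String method, String url, String body) throws IOException {
        HttpURLConnection connection = (HttpURLConnection) new URL(url).openConnection();
        try {
            connection.setRequestMethod(method);
            if (body != null) {
                connection.setDoOutput(true);
                connection.setRequestProperty("Content-Type", "application/json");
                try (OutputStream out = connection.getOutputStream()) {
                    out.write(body.getBytes(StandardCharsets.UTF_8));
                }
            }
            return connection.getResponseCode();
        } finally {
            connection.disconnect();
        }
    }
}
```

For example, new RiverControl("http://localhost:9200", "my_river").indexFor(5 * 60 * 1000L) would let the river run for about five minutes (an arbitrary duration chosen here) before deleting it.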

Now that we have data indexed by ElasticSearch, let’s dig into the eXo search connector!

eXo search connector

A search connector is a simple class that extends SearchServiceConnector and implements the “search” method:

package org.exoplatform.search.elasticsearch;

import ...

public class ElasticSearchConnector extends SearchServiceConnector {

    public ElasticSearchConnector(InitParams initParams) {
        super(initParams);
    }

    @Override
    public Collection<SearchResult> search(SearchContext context, String query, Collection<String> sites, int offset, int limit, String sort, String order) {
        // Fetch data
    }
}

It needs to be declared in the eXo configuration, either in an extension or directly in the jar which will contain the connector class. Let’s go for the jar method:

- add the class in your jar

- add a file named in in your jar with the following content (the “type” tag contains the FQN of your connector class):

<configuration
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://www.exoplatform.org/xml/ns/kernel_1_2.xsd http://www.exoplatform.org/xml/ns/kernel_1_2.xsd"
    xmlns="http://www.exoplatform.org/xml/ns/kernel_1_2.xsd">
  <external-component-plugins>
    <target-component>org.exoplatform.commons.api.search.SearchService</target-component>
    <component-plugin>
      <name>ElasticSearchConnector</name>
      <set-method>addConnector</set-method>
      <type>org.exoplatform.search.elasticsearch.ElasticSearchConnector</type>
      <description>ElasticSearch Connector</description>
      <init-params>
        <properties-param>
          <name>constructor.params</name>
          <property name="searchType" value="wikipedia"/>
          <property name="displayName" value="Wikipedia"/>
        </properties-param>
      </init-params>
    </component-plugin>
  </external-component-plugins>
</configuration>

We now have the skeleton of our search connector. The last step is to implement the search method.

Fetching results from ElasticSearch

We need to call ElasticSearch in order to fetch Wikipedia pages based on the input parameters of the search (query text, offset, limit, sort field, sort order). ElasticSearch provides a Java Client API (TransportClient). Sadly, it depends on Lucene artifacts, and since eXo Platform already embeds Lucene artifacts that are not necessarily in the same version as the ones needed by ElasticSearch, it can cause conflicts. Instead we will directly use the REST API:

package org.exoplatform.search.elasticsearch;

import org.apache.commons.io.IOUtils;
import org.apache.http.HttpResponse;
import org.apache.http.client.HttpClient;
import org.apache.http.client.methods.HttpPost;
import org.apache.http.entity.StringEntity;
import org.apache.http.impl.client.DefaultHttpClient;
import org.exoplatform.commons.api.search.SearchServiceConnector;
import org.exoplatform.commons.api.search.data.SearchContext;
import org.exoplatform.commons.api.search.data.SearchResult;
import org.exoplatform.container.xml.InitParams;
import org.json.simple.JSONArray;
import org.json.simple.JSONObject;
import org.json.simple.parser.JSONParser;
import java.io.StringWriter;
import java.util.*;

public class ElasticSearchConnector extends SearchServiceConnector {

    // Maps the sort names used by eXo to ElasticSearch fields
    private Map<String, String> sortMapping = new HashMap<String, String>();

    public ElasticSearchConnector(InitParams initParams) {
        super(initParams);
        sortMapping.put("date", "title"); // no date field on wikipedia results
        sortMapping.put("relevancy", "_score");
        sortMapping.put("title", "title");
    }

    @Override
    public Collection<SearchResult> search(SearchContext context, String query, Collection<String> sites, int offset, int limit, String sort, String order) {
        Collection<SearchResult> results = new ArrayList<SearchResult>();

        // Fall back to relevancy when the requested sort name is unknown
        String sortField = sortMapping.containsKey(sort) ? sortMapping.get(sort) : "_score";

        // Build the JSON request body; the user query is escaped so that
        // quotes or backslashes in it cannot break the JSON
        String esQuery = "{\n" +
            " \"from\" : " + offset + ", \"size\" : " + limit + ",\n" +
            " \"sort\" : [\n" +
            " { \"" + sortField + "\" : {\"order\" : \"" + order + "\"}}\n" +
            " ],\n" +
            " \"query\": {\n" +
            " \"filtered\" : {\n" +
            " \"query\" : {\n" +
            " \"query_string\" : {\n" +
            " \"query\" : \"" + JSONObject.escape(query) + "\"\n" +
            " }\n" +
            " }\n" +
            " }\n" +
            " },\n" +
            " \"highlight\" : {\n" +
            " \"fields\" : {\n" +
            " \"text\" : {\"fragment_size\" : 150, \"number_of_fragments\" : 3}\n" +
            " }\n" +
            " }\n" +
            "}";

        try {
            // Send the query to the ElasticSearch REST API
            HttpClient client = new DefaultHttpClient();
            HttpPost request = new HttpPost("http://localhost:9200/_search");
            StringEntity input = new StringEntity(esQuery, "UTF-8");
            request.setEntity(input);
            HttpResponse response = client.execute(request);

            // Read the whole response as a string
            StringWriter writer = new StringWriter();
            IOUtils.copy(response.getEntity().getContent(), writer, "UTF-8");
            String jsonResponse = writer.toString();

            // The hits are located at hits.hits in the response
            JSONParser parser = new JSONParser();
            Map json = (Map) parser.parse(jsonResponse);
            JSONObject jsonResult = (JSONObject) json.get("hits");
            JSONArray jsonHits = (JSONArray) jsonResult.get("hits");

            for (Object jsonHit : jsonHits) {
                JSONObject hitSource = (JSONObject) ((JSONObject) jsonHit).get("_source");
                String title = (String) hitSource.get("title");

                // A hit may come without highlights (for example a match on the title only)
                JSONObject hitHighlights = (JSONObject) ((JSONObject) jsonHit).get("highlight");
                StringBuilder text = new StringBuilder();
                if (hitHighlights != null && hitHighlights.get("text") != null) {
                    for (Object hitHighlightsText : (JSONArray) hitHighlights.get("text")) {
                        text.append((String) hitHighlightsText).append(" ... ");
                    }
                }

                results.add(new SearchResult(
                    "http://wikipedia.org",
                    title,
                    text.toString(),
                    "",
                    "http://upload.wikimedia.org/wikipedia/commons/thumb/7/77/Wikipedia_svg_logo.svg/45px-Wikipedia_svg_logo.svg.png",
                    new Date().getTime(),
                    1
                ));
            }
        } catch (Exception e) {
            e.printStackTrace();
        }

        return results;
    }
}

Requests and responses are plain JSON. You can find more details about the ElasticSearch query syntax in its documentation. The important point for the search connector is that each result must be a SearchResult object, returned in a collection.
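To make the JSON request more concrete, here is a minimal, dependency-free sketch that builds the same kind of request body as the connector, so you can print it and inspect it outside eXo. The class EsRequestBody and its methods are hypothetical names introduced for this illustration, and the escape helper is a simplified stand-in for what a JSON library would normally do.

```java
/** Hypothetical stand-alone helper that rebuilds the request body sent to ElasticSearch. */
final class EsRequestBody {

    /** Minimal JSON string escaping: quotes, backslashes and control characters. */
    static String escape(String s) {
        StringBuilder out = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  out.append("\\\""); break;
                case '\\': out.append("\\\\"); break;
                case '\n': out.append("\\n"); break;
                case '\r': out.append("\\r"); break;
                case '\t': out.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        out.append(String.format("\\u%04x", (int) c));
                    } else {
                        out.append(c);
                    }
            }
        }
        return out.toString();
    }

    /** Builds a paged, sorted query_string request with highlighting on the "text" field. */
    static String build(String query, int offset, int limit, String sortField, String order) {
        return "{\n"
            + "  \"from\" : " + offset + ", \"size\" : " + limit + ",\n"
            + "  \"sort\" : [ { \"" + escape(sortField) + "\" : { \"order\" : \"" + escape(order) + "\" } } ],\n"
            + "  \"query\" : { \"filtered\" : { \"query\" : { \"query_string\" : { \"query\" : \"" + escape(query) + "\" } } } },\n"
            + "  \"highlight\" : { \"fields\" : { \"text\" : { \"fragment_size\" : 150, \"number_of_fragments\" : 3 } } }\n"
            + "}";
    }
}
```

Calling EsRequestBody.build("living \"colossus\"", 20, 10, "_score", "desc") asks for results 21 to 30 sorted by score, and the double quotes inside the query come out backslash-escaped, so the body remains valid JSON whatever the user types.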

Deploy it in eXo, and enjoy!

We can now deploy our jar (which contains the SearchConnector class and the XML configuration file) in the libs of the application server ( of Tomcat for example) and start eXo.

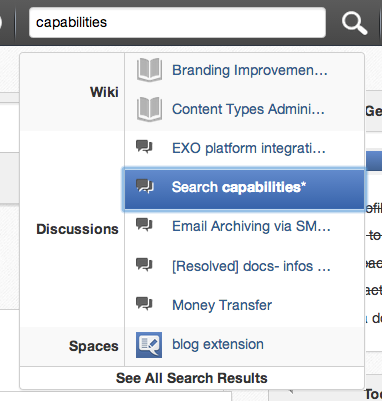

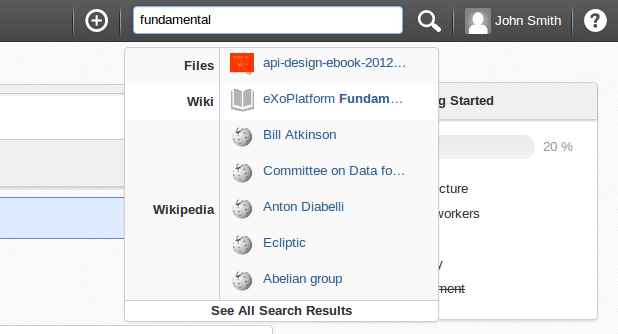

A search using the quick search in the toolbar now retrieves contents from Wikipedia:

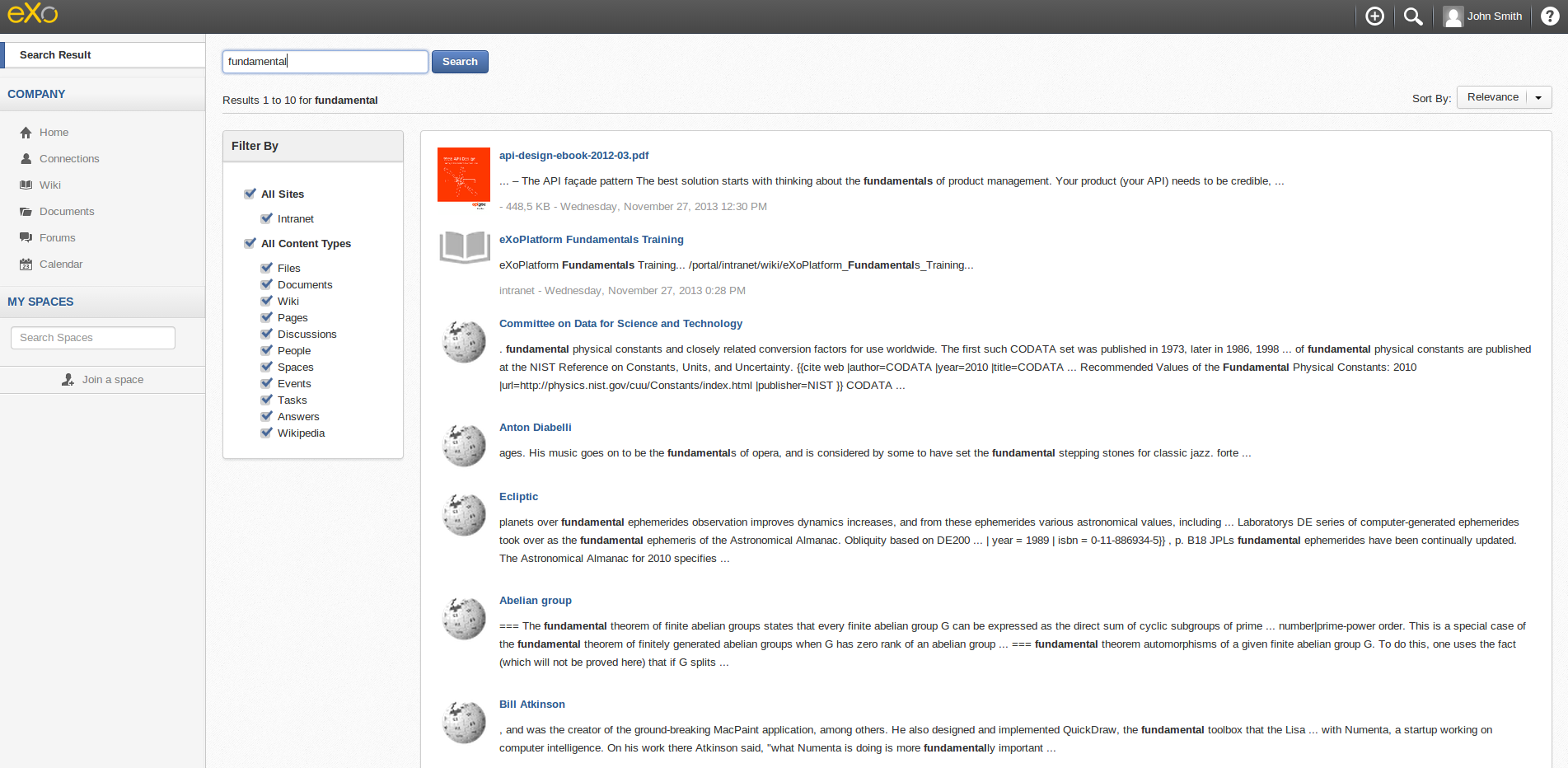

When the unified search screen is displayed, we can see that a new Wikipedia filter is listed, and our search results contain some Wikipedia pages:

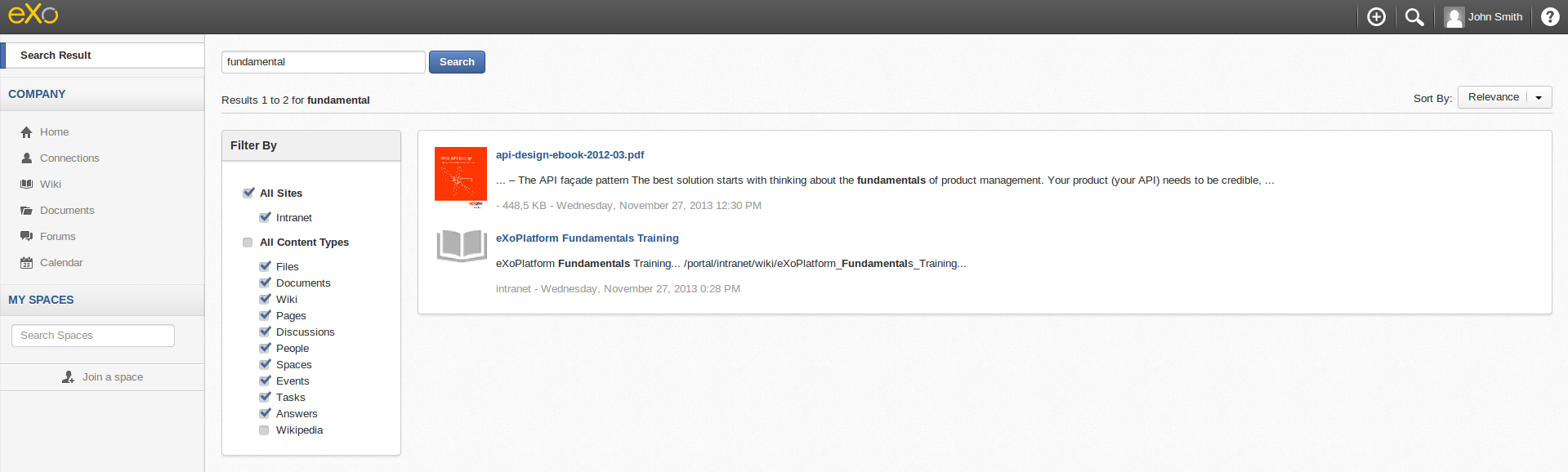

If you don’t want to see Wikipedia contents in your results, simply uncheck the filter:

The source code is available here, as a Maven project.

Learn more about this project and what you can do with eXo Platform; join the eXo tribe!